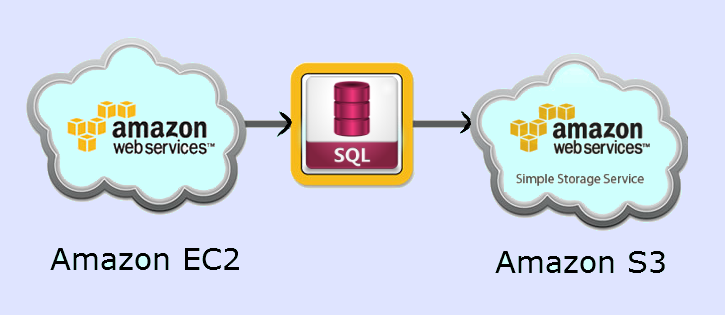

Ensuring the safety of your data is paramount, and automating backups is a key strategy for achieving this. In this blog, we’ll walk through the process of automating MySQL database backups on an AWS EC2 instance and storing them in an S3 bucket. This setup will ensure that our data is backed up regularly and securely.

Prerequisites

Before we begin, make sure you have the following:

- An AWS account

- IAM user with appropriate permissions on EC2 and S3 bucket.

- An EC2 instance running Ubuntu or a similar Linux distribution

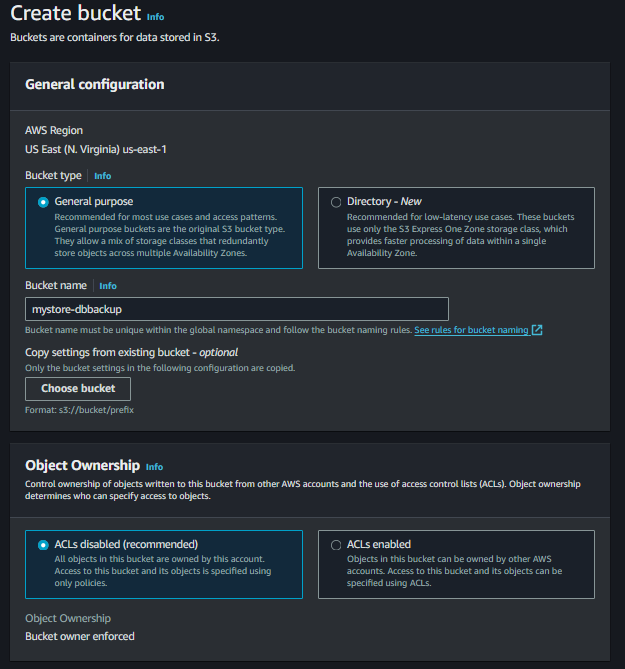

Step 1: Create an S3 Bucket and LifeCycle rule

Create an S3 bucket where the backups will be stored.

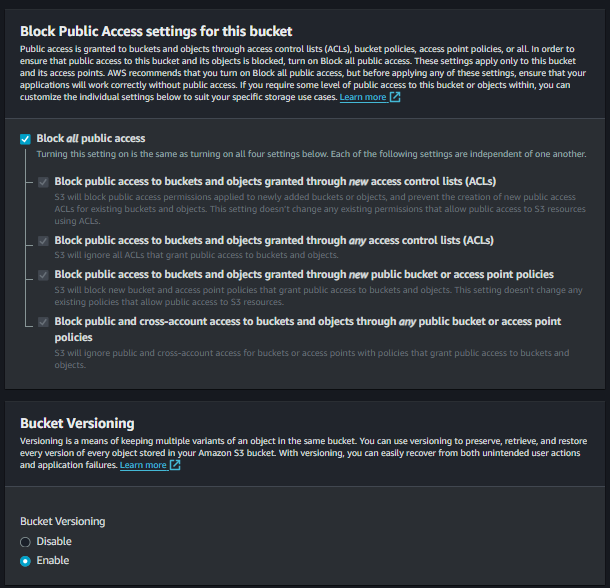

Make sure versioning is enabled as going forward we have to apply lifecycle policy on our bucket.

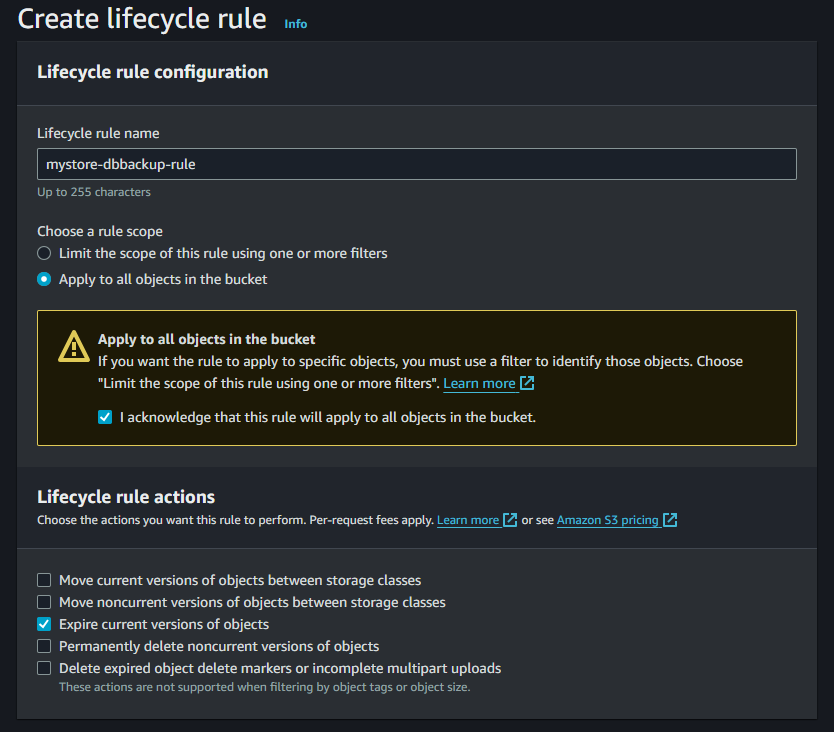

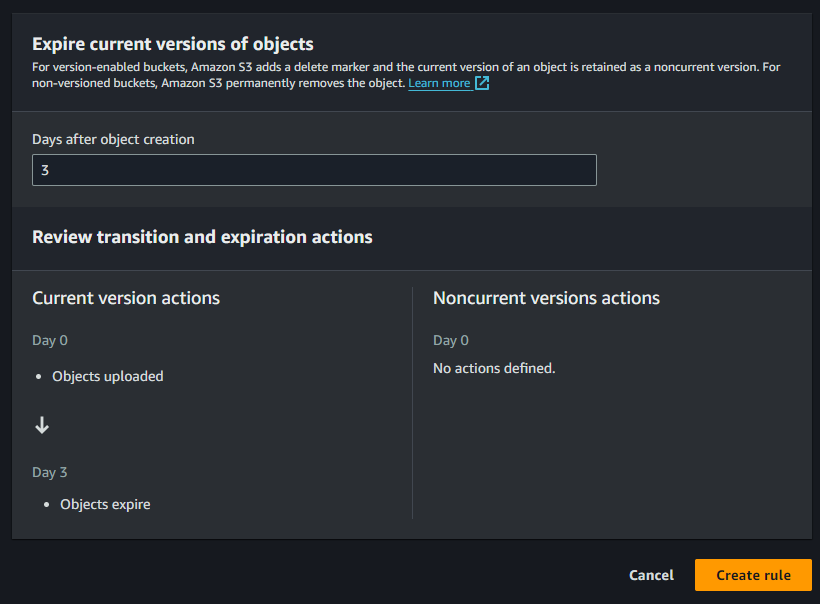

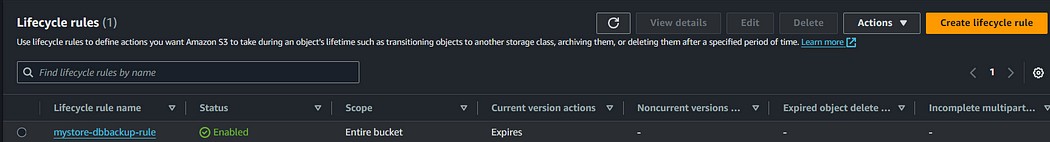

Once bucket gets created, navigate to management and create lifecycle rule.

- Give name to your lifecycle rule

- Choose “Apply to all objects in the bucket” rule scope

- Lifecycle rule options — Expire current versions of objects.

You can set the scope and actions as per your requirements.

- Set expiration days to 3 as we will be storing backups of last 3 days.

Click create rule and it’s done.

Step 2: Attach an IAM Role to the EC2 Instance

Let’s create an IAM role with the necessary permissions for EC2 to write to our S3 bucket.

Create new role for EC2 service and attach below policy with the neccessary S3 permissions.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::mystore-dbbackup",

"arn:aws:s3:::mystore-dbbackup/*"

]

}

]

}

replacing mystore-dbbackup with your bucket name:

Attach the created IAM role to the EC2 instance and now our server will be able to communicate with the created S3 bucket.

Step 3: Install MySQL on EC2 Instance

If MySQL is not already installed on EC2 instance, you can install it using the following commands:

sudo apt-get update

sudo apt-get install mysql-server

Once installed, let’s secure our MySQL installation:

sudo mysql_secure_installation

Step 4: Create a Database and Table with Sample Data

Log in to the MySQL server and create a user specifically for performing backups:

sudo mysql -u root

Then run the following SQL commands to create the database, a table, and insert some sample data:

CREATE DATABASE mystore;

USE mystore;

CREATE TABLE product (

id INT AUTO_INCREMENT PRIMARY KEY,

name VARCHAR(100),

price DECIMAL(10,2)

);

INSERT INTO product (name, price) VALUES ('Product1', 10.00), ('Product2', 20.00), ('Product3', 30.00);

Step 5: Create a Database User for Backups

Create a user specifically for performing backups:

CREATE USER 'backupuser'@'localhost' IDENTIFIED BY 'User@1234';

GRANT PROCESS, RELOAD, LOCK TABLES, SHOW DATABASES, REPLICATION CLIENT ON *.* TO 'backupuser'@'localhost';

FLUSH PRIVILEGES;

Modify the above commands as per your database details.

Our database setup is completed and now let’s move forward to the next step of writing backup script.

Step 6: Write a Backup Script

Create a file backup_script.sh in the EC2 instance that will handle the database dump.

#!/bin/bash

# MySQL database credentials

DB_USER="backupuser"

DB_PASS="User@1234"

DB_NAME="mystore"

# Directory to store backups temporarily

BACKUP_DIR="/tmp/mysql_backups"

mkdir -p $BACKUP_DIR

# Timestamp for backup filename

TIMESTAMP=$(date +"%Y%m%d%H%M%S")

# S3 bucket name

S3_BUCKET="mystore-dbbackup"

# Dump MySQL database

BACKUP_FILE="$BACKUP_DIR/$DB_NAME-$TIMESTAMP.sql"

mysqldump -u$DB_USER -p$DB_PASS $DB_NAME > $BACKUP_FILE

# Upload to S3

aws s3 cp $BACKUP_FILE s3://$S3_BUCKET/

# Optional: Remove the local backup file after upload

rm $BACKUP_FILE

Add your database connection details and S3 bucket name in the above file.

Make the script executable:

chmod +x backup_script.sh

Step 7: Schedule the Backup Script with Cron

Edit the crontab to schedule the backup script to run every 3 hours:

crontab -e

Add the following line to the crontab file:

0 */3 * * * /home/ubuntu/backup_script.sh

Replace /home/ubuntu/backup_script.sh with the actual path to your script.

Great! We are done with our entire setup and now it’s time to test the things.

Step 8: Verify the setup

To ensure everything is set up correctly:

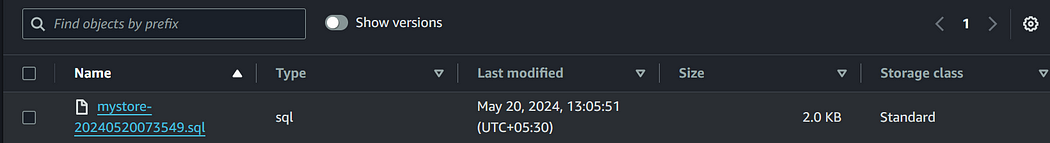

- Manual Run: Execute the backup script manually and check if the backup file is created and uploaded to the S3 bucket.

./backup_script.sh

2. Check Logs: Look at the cron logs to verify the job runs as scheduled. You can check cron logs typically at /var/log/syslog or /var/log/cron.

grep CRON /var/log/syslog

We can see that our script has been executed successfully and it pushed the MySQL back dump into our S3 bucket 🥳.

Conclusion

In this blog, we’ve automated the process of backing up our MySQL database from an EC2 instance to an S3 bucket. This setup ensures that our data is backed up regularly and can be restored in case of any data loss or failure.

I hope you found this content informative and enjoyable. For more insightful blogs and updates, consider following and clicking the 👏 button below to show your support. Happy coding! 🚀

Thank you for reading! 💚